You’d have to be young or have millennials as children (I do) to have heard of the rock band Rage Against the Machine, popular enough to have entered balloting for the Rock and Roll Hall of Fame this year. Medical underwriting (and underwriting in general) has its own version of “rage against the machine”: increasingly, computer templates are making binding underwriting decisions from programmed-in information, and those decisions are virtually unappealable. It’s a frustrating trend that takes the human element out of the very human business of assessing health and risk.

The equally famous line “Just the facts, ma’am” (Jack Webb, Dragnet) describes what is going into final premium determinations for many insurance products. Low-margin term insurance and long-term care riders are at the forefront of this. A decision is made, and the agent or broker is essentially informed in advance that there will be no appeals, oral, written, or otherwise. The problems, however, arise from the information that goes into the machine, or, just as likely, from what doesn’t go in. Sometimes it’s an error, sometimes a misjudgment, sometimes an omission, and often it’s a critical piece of information for which there is simply no input category. Either way, the picture is not a true one, and the decision’s accuracy is correspondingly limited.

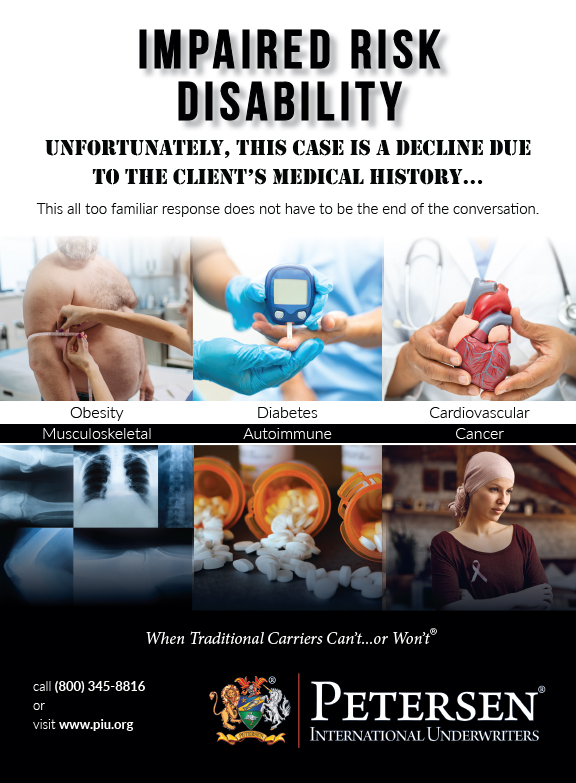

Much underwriting experience data is segregated by disease entity, and of course no two individuals express the same disease in the same way or with the same severity. Entering the degree of disease or its aggressiveness is often a subjective judgment. In a connective tissue disease, the distinction between mild and moderate, or between moderate and severe, can mean a completely different underwriting classification. Sometimes there are objective measurements (in a disease affecting the kidneys, lab measurements are quite accurate in determining how severe the disease is). In just as many other cases (sometimes judged only by symptoms), the measured severity does not mirror the ultimate morbidity or mortality. You know how the client looks and functions; the machine does not, and in a difference of opinion it’s obvious who the victor is.

Misclassification is another area where the automated system fails, or, more likely, where the in-putter fails the system. Either way, the result is inaccurate. Objective evidence, such as a pathology report of a cancer or lab values documenting diabetes control, is clear-cut. In rheumatologic or even neurologic disease, it is much less so. When an assessment is not made to the best of actual ability, a challenge lets the attending physician step in and make the most accurate call on his or her patient. In non-appealable cases, that ability is lost.

Dates are key in assessing ratings for individual policies. There are definite and stringent cutoffs, particularly in cancer ratings, that determine what an individual’s rating will be after a defined waiting period. When dates are even a little off relative to dates of treatment, the rating can change, or a policy that could otherwise be placed can even be declined. This is not the machine’s fault; here the rage is more accurately directed at the hands of the in-putter.

Medication lists trigger actions by the automated program that are open to interpretation and are often complicated by physicians’ prescribing habits, for which the program can make no allowance. Particularly in long-term care underwriting, certain medications are “rule-outs” from the beginning. There are many cases, however, where a doctor prescribes a medicine off-label, or for one of its side effects, to treat a condition unrelated to the disease the program considers uninsurable. In fact, so many medications nowadays are given for conditions other than those they were originally developed for (antidepressants for nerve pain, sleep, or bladder problems, or anticancer drugs used as immunosuppressants in GI and rheumatologic disease) that in many cases the rule-out doesn’t hold at all. When a decision is not appealable, all those cases fall by the wayside for the wrong reasons.

One other area where the machine fails is in incorporating factors that are necessary for accurate risk assessment but are not included in the equation. This is displaced rage against the machine, as there is truly no way the automated decision can include factors of which it has no knowledge. These factors include everything you know about the individual, such as activity level, social involvement, activities of daily living, chronicity of disease, and how well the individual copes with and compensates for it. These are positives that can tip a situation from gray to black and white, yet the program decides without the benefit of considering them.

The trend toward newer products that are lower margin, more easily underwritten without an underwriter’s hands-on involvement, less costly to provide and administer, and more competition-friendly (with lower costs passed on to consumers and spreadsheeted more competitively) produces a process that works for the healthy (lowest cost) and for the markedly impaired (declined, while limiting acquisition and administration costs), but falls short in the shades of gray in between. The ability to appeal such cases can’t be lost, or the rage against the machine will lead brokers and agents to places where their voices can be heard.